Robust Planning with Large Language Models (LLMPlan)

Our project contributes to bridging the gap between learning and reasoning by developing a system that uses a large language model to automatically extract symbolic planning models from unstructured natural language input and then uses the models for goal-directed planning. By combining the flexibility and simplicity of language models with the speed and reliability of planners, our system will allow non-experts to find trustworthy plans for real-world applications.

Large language models (LLMs) such as the GPT model family are among the most important breakthroughs in recent AI history (Brown et al. 2020; Ouyang et al. 2022; OpenAI 2023a; OpenAI 2023b). They have enabled significant advances in many natural language processing (NLP) tasks such as sentiment analysis, text classification, and machine translation. These successes have prompted researchers to test whether LLMs also possess arithmetic and logical reasoning capabilities (e.g., Kojima et al. 2022; Wei et al. 2022), often with impressive results. In a talk about a recent paper (Bubeck et al. 2023), its first author even suggests that GPT-4 exhibits six of the seven traits commonly associated with intelligence (Gottfredson 1997): it can reason, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience (Bubeck 2023). In his view, the only missing trait is the ability to plan, i.e., to find a sequence of actions that achieves a given goal (Ghallab, Nau, and Traverso 2004). The aim of our project is to give LLMs this capability.

Several approaches prompt an LLM with a natural language description of a planning task and let the model return a suitable action sequence directly (Feng, Zhuo, and Kambhampati, n.d.; Olmo, Sreedharan, and Kambhampati 2021; Huang et al. 2022). However, such a direct mapping is too brittle for a robust and trustworthy system. First, it assumes that LLMs can reason about complex actions and change, which is still out of reach for current models (Valmeekam et al. 2022; Bubeck et al. 2023). For example, even GPT-4 fails to solve the Sussman anomaly (Sussman 1973), a simple planning task in which three blocks A, B, and C must be rearranged from an initial state with A and B on the table and C atop A into a single stack with A atop B and B atop C. Second, the learned mapping is usually very hard to understand and debug, since it is typically encoded in a deep neural network.
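For contrast, the same task is easy for a classical search algorithm. The following Python sketch is our own illustration and not part of the project's system: it assumes a simplified blocks world with a single move(block, from, to) action that relocates a clear block, and runs breadth-first search over complete states.

```python
from collections import deque

BLOCKS = ("A", "B", "C")

# A state maps every block to its support (another block or "table"),
# stored as a frozenset of (block, support) pairs so that it is hashable.
SUSSMAN_INIT = frozenset({("A", "table"), ("B", "table"), ("C", "A")})
SUSSMAN_GOAL = frozenset({("A", "B"), ("B", "C")})


def is_clear(state, x):
    """The table is always clear; a block is clear if nothing rests on it."""
    return x == "table" or all(support != x for _, support in state)


def successors(state):
    """Yield (move, next_state) for every legal move(block, src, dst)."""
    on = dict(state)
    for block in BLOCKS:
        if not is_clear(state, block):
            continue  # only the top block of a stack can be moved
        for dst in (*BLOCKS, "table"):
            if dst in (block, on[block]) or not is_clear(state, dst):
                continue  # skip self-moves, no-op moves and covered targets
            nxt = dict(on)
            nxt[block] = dst
            yield (block, on[block], dst), frozenset(nxt.items())


def bfs_plan(init, goal):
    """Breadth-first search: return a shortest move sequence, or None."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return plan
        for move, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, plan + [move]))
    return None


print(bfs_plan(SUSSMAN_INIT, SUSSMAN_GOAL))
```

Running it prints `[('C', 'A', 'table'), ('B', 'table', 'C'), ('A', 'table', 'B')]`: move C to the table, then stack B on C and A on B. Because the search considers complete states rather than achieving the two goal facts one after the other, it is not trapped by the goal interaction that makes the task an anomaly for naive goal-by-goal strategies.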

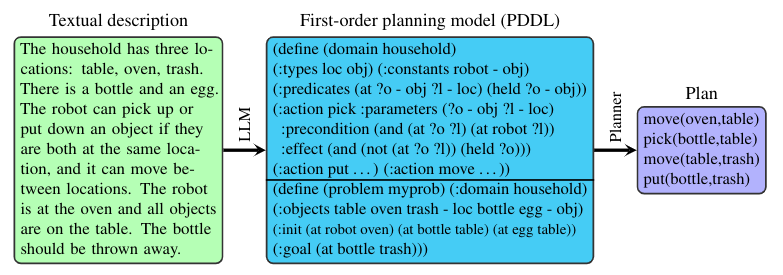

To overcome these issues, we will divide the process into two steps (see the figure above): given natural language text, we will first extract a symbolic, first-order model of the planning task and then solve it with an off-the-shelf planner. This two-step approach has two main benefits. First, it gives users access to the learned planning model and thus allows them to inspect and understand what the system has learned. Second, instead of hoping that the LLM exhibits planning capabilities, we let it focus on its strength, knowledge extraction, and leave the search for a plan to a planner, which does this efficiently.
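The two steps can be pictured as a small pipeline. This is a minimal sketch under our own assumptions, not the project's implementation: `extract_model` is a hypothetical stand-in for the LLM call that simply returns a hand-written, ground STRIPS model of a made-up process-manual sentence, and `plan` is a plain breadth-first search standing in for an off-the-shelf planner. What matters is that the intermediate `Task` object is an ordinary data structure a user can inspect before any plan is computed.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset     # facts that must hold before the action
    add: frozenset     # facts the action makes true
    delete: frozenset  # facts the action makes false


@dataclass(frozen=True)
class Task:
    init: frozenset
    goal: frozenset
    actions: tuple


def make_action(name, pre, add, delete):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def extract_model(text: str) -> Task:
    """Step 1 (hypothetical): a real system would prompt an LLM with `text`.
    Here we return a hand-written model for the example sentence."""
    return Task(
        init=frozenset({"running"}),
        goal=frozenset({"running", "updated"}),
        actions=(
            make_action("stop_service", {"running"}, {"stopped"}, {"running"}),
            make_action("apply_update", {"stopped"}, {"updated"}, set()),
            make_action("start_service", {"stopped", "updated"}, {"running"}, {"stopped"}),
        ),
    )


def plan(task: Task):
    """Step 2: breadth-first search over STRIPS states (stand-in for a planner)."""
    frontier = deque([(task.init, [])])
    seen = {task.init}
    while frontier:
        state, steps = frontier.popleft()
        if task.goal <= state:
            return steps
        for a in task.actions:
            if a.pre <= state:
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [a.name]))
    return None


MANUAL = ("Before updating, stop the service. Apply the update, "
          "then start the service again.")
model = extract_model(MANUAL)
for action in model.actions:  # the extracted model can be inspected first
    print(action.name, sorted(action.pre), "->", sorted(action.add))
print(plan(model))
```

The final line prints `['stop_service', 'apply_update', 'start_service']`. In the envisioned system, step 1 would emit PDDL and step 2 would call an existing planner; only the interface between the two steps is meant to carry over from this sketch.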

As the target language for representing the planning task, we will use the Planning Domain Definition Language (PDDL) (McDermott et al. 1998). PDDL separates information about the general planning domain (e.g., predicates and actions) from the specific planning task (e.g., initial state and goals). Currently, automated planning can only be applied in simple domains, where specifying the PDDL model of the domain by hand is not too complicated. However, real-world planning tasks, such as those that arise in industry, usually stem from domains where writing models manually is tedious, error-prone, and often simply infeasible.
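To illustrate the domain/task split, the sketch below stores a PDDL model of the blocks-world task from the Sussman example as Python strings. The domain declares predicates and action schemas once (here a standard four-operator blocks-world formulation), while the problem lists only objects, the initial state, and the goal. The helper `write_pddl` and the file names are our own illustrative choices; PDDL planners such as Fast Downward take the domain file and the problem file as separate inputs.

```python
import tempfile
import textwrap
from pathlib import Path

# Domain file: predicates and action schemas, written once per domain.
DOMAIN = textwrap.dedent("""\
    (define (domain blocksworld)
      (:requirements :strips)
      (:predicates (on ?x ?y) (ontable ?x) (clear ?x) (handempty) (holding ?x))
      (:action pick-up
       :parameters (?x)
       :precondition (and (clear ?x) (ontable ?x) (handempty))
       :effect (and (not (ontable ?x)) (not (clear ?x)) (not (handempty)) (holding ?x)))
      (:action put-down
       :parameters (?x)
       :precondition (holding ?x)
       :effect (and (not (holding ?x)) (clear ?x) (handempty) (ontable ?x)))
      (:action stack
       :parameters (?x ?y)
       :precondition (and (holding ?x) (clear ?y))
       :effect (and (not (holding ?x)) (not (clear ?y)) (clear ?x) (handempty) (on ?x ?y)))
      (:action unstack
       :parameters (?x ?y)
       :precondition (and (on ?x ?y) (clear ?x) (handempty))
       :effect (and (holding ?x) (clear ?y) (not (clear ?x)) (not (handempty)) (not (on ?x ?y))))
    )
    """)

# Problem file: only the objects, initial state and goal of one task.
SUSSMAN_PROBLEM = textwrap.dedent("""\
    (define (problem sussman-anomaly)
      (:domain blocksworld)
      (:objects a b c)
      (:init (ontable a) (ontable b) (on c a) (clear b) (clear c) (handempty))
      (:goal (and (on a b) (on b c))))
    """)


def write_pddl(directory: Path, domain: str, problems: dict) -> list:
    """Write one shared domain file plus one file per problem; return problem paths."""
    directory.mkdir(parents=True, exist_ok=True)
    (directory / "domain.pddl").write_text(domain)
    paths = []
    for name, text in problems.items():
        path = directory / f"{name}.pddl"
        path.write_text(text)
        paths.append(path)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for path in write_pddl(Path(tmp), DOMAIN, {"sussman": SUSSMAN_PROBLEM}):
            print(path.name)
```

Adding another blocks-world task only requires another problem string; the domain text is reused unchanged. This reuse is what makes an extracted domain model valuable beyond a single task.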

In many of these scenarios, however, domain descriptions are readily available in natural language. For example, process manuals outline which actions should be executed when certain events occur, and user manuals describe how people can interact with a system. Even where such natural language descriptions are unavailable, domain experts without a planning background can often produce them easily. Most importantly, much of the required information might already be contained within the LLM itself.

At the end of our project, we will have developed a system that can automatically distill this information into planning models and solve them with off-the-shelf planners. This will allow practitioners to adopt automated planning in scenarios where it was previously infeasible to write the necessary domain models. This outcome is highly desirable since it means that practitioners can use automated planners and benefit from understandable and extendable planning models, instead of having to find solutions for their planning tasks manually or with dedicated code.

PI: Jendrik Seipp

Core team: Elliot Gestrin, Marco Kuhlmann

Funding: This project is supported by the CUGS Graduate School in Computer Science at Linköping University.